Chaos and Variance in (Simulations of) Galaxy Formation

During yesterday’s viva voce examination a paper came up that I missed when it came out last year. It’s by Keller et al. and is called Chaos and Variance in Galaxy Formation. The abstract reads:

The evolution of galaxies is governed by equations with chaotic solutions: gravity and compressible hydrodynamics. While this micro-scale chaos and stochasticity has been well studied, it is poorly understood how it couples to macro-scale properties examined in simulations of galaxy formation. In this paper, we show how perturbations introduced by floating-point roundoff, random number generators, and seemingly trivial differences in algorithmic behaviour can produce non-trivial differences in star formation histories, circumgalactic medium (CGM) properties, and the distribution of stellar mass. We examine the importance of stochasticity due to discreteness noise, variations in merger timings and how self-regulation moderates the effects of this stochasticity. We show that chaotic variations in stellar mass can grow until halted by feedback-driven self-regulation or gas exhaustion. We also find that galaxy mergers are critical points from which large (as much as a factor of 2) variations in quantities such as the galaxy stellar mass can grow. These variations can grow and persist for more than a Gyr before regressing towards the mean. These results show that detailed comparisons of simulations require serious consideration of the magnitude of effects compared to run-to-run chaotic variation, and may significantly complicate interpreting the impact of different physical models. Understanding the results of simulations requires us to understand that the process of simulation is not a mapping of an infinitesimal point in configuration space to another, final infinitesimal point. Instead, simulations map a point in a space of possible initial conditions points to a volume of possible final states.

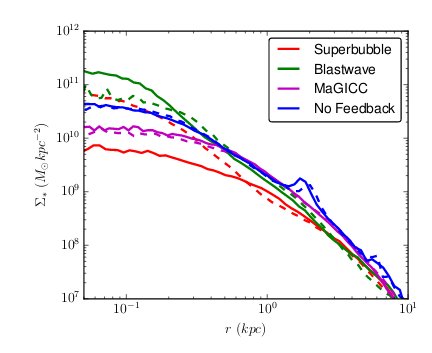

(The highlighting is mine.) I find this analysis pretty scary, actually, as it shows that numerical effects (including just running the code on different processors) can have an enormous impact on the outputs of these simulations. Here’s Figure 14 for example:

This shows the predicted stellar surface mass density in a number of simulations: the outputs vary by more than an order of magnitude!

This paper underlines an important question which I have worried about before, and could paraphrase as “Do we trust N-body simulations too much?”. The use of numerical codes in cosmology is widespread and there’s no question that they have driven the subject forward in many ways, not least because they can generate “mock” galaxy catalogues in order to help plan survey strategies. However, I’ve always been concerned that there is a tendency to trust these calculations too much. On the one hand there’s the question of small-scale resolution and on the other there’s the finite size of the computational volume. And there are other complications in between too. In other words, simulations are approximate. To some extent our ability to extract information from surveys will therefore be limited by the inaccuracy of our calculation of the theoretical predictions.

Anyway, the paper gives us quite a few things to think about and I think it might provoke a bit of discussion, which is why I mentioned it here – i.e. to encourage folk to read and give their opinions.

The use of the word “simulation” always makes me smile. Being a crossword nut I spend far too much time looking in dictionaries but one often finds quite amusing things there. This is how the Oxford English Dictionary defines SIMULATION:

1.

a. The action or practice of simulating, with intent to deceive; false pretence, deceitful profession.

b. Tendency to assume a form resembling that of something else; unconscious imitation.

2. A false assumption or display, a surface resemblance or imitation, of something.

3. The technique of imitating the behaviour of some situation or process (whether economic, military, mechanical, etc.) by means of a suitably analogous situation or apparatus, esp. for the purpose of study or personnel training.

So it’s only the third entry that gives the intended meaning. This is worth bearing in mind if you prefer old-fashioned analytical theory!

In football, of course, you can even get sent off for simulation…


September 11, 2019 at 3:52 pm

I’m surprised this isn’t picked up by diagnostic outputs during the simulation. For instance, I’d expect the imprecision to lead to significant changes in (what should be) conserved quantities.

September 11, 2019 at 3:54 pm

Even standard SPH codes don’t conserve energy or entropy all that well. When you try to include ‘subgrid physics’ with phenomenological rules then they get even worse…

September 11, 2019 at 4:31 pm

I appreciate standard conserved quantities might not be right! But there are surely some tests of numerical drift being done?

September 11, 2019 at 4:37 pm

One would hope so…

September 11, 2019 at 3:55 pm

The future evolution of cosmological simulations was actually one of the main points of yesterday’s thesis!

September 11, 2019 at 4:37 pm

As a matter of fact we spent a while talking about z=-0.992

September 11, 2019 at 9:42 pm

There’s been recent work by Mishra, Fjordholm and co on similar issues with grid codes when there’s shock-turbulence interaction. They’re able to “save” the situation by using ensemble approaches (on top of the grid simulations: horribly expensive); it may be that similar approaches would work in SPH. arXiv:1906.02536 is the most recent of theirs that I know (at only 25 pages of measure theory before the 15 pages of simulation results it’s also one of the more readable…).

Their work isn’t coming from astro/cosmology but does link to the Uncertainty Quantification field, which seems to be putting a lot of effort into understanding what “trusting a simulation” should actually mean. The statistics is currently over my head, but may appeal to you.

September 12, 2019 at 2:50 am

Common in the field of galaxy evolution to find theorists with extraordinary faith in the universes they’ve created. Observers with evidence flatly contradicting their assertions are then dismissed as idiots (which many of us are, to be fair, but the evidence lies elsewhere…)

Sometimes theorists detest their findings so much that they spend decades trying to wriggle out of conclusions that are in fact genuinely interesting. Many years ago it was found, to the horror of many, that a top-heavy stellar IMF is required to account for high-redshift starburst galaxies such that their existence does not violate other known properties of the Universe. Last year, three independent observational studies concluded that starbursts do have top-heavy IMFs, likely because of the influence of cosmic rays associated with intense starbursts.

September 16, 2019 at 10:49 pm

In the 1980s, when chaos was a hot topic, I remember buying a short, highly mathematical paperback book, with quite a large page size, establishing that the Navier-Stokes equations exhibited sensitive dependence on initial conditions, i.e. neighbouring trajectories in phase space diverged with time, and diverged exponentially. Trouble at t’mill!

September 17, 2019 at 11:30 am

Last Saturday I looked round the first cotton or flax mill to have an iron frame, a building which is the precursor of all skyscrapers. It is also the first mill to have been powered by a steam engine rather than a waterwheel. It is in Ditherington, a northern suburb of Shrewsbury, and is currently being restored; at present you can get inside it only on open days when there is a guided tour, although the museum next to it is open every weekend. More remarkable industrial heritage in Shropshire!

September 24, 2019 at 8:39 am

What is the temperature of “Chaos”?

Of which quantum particles does “Chaos” consist?

How does “Order” arise from “Chaos”?

(atom, molecule, cell, body . . . . )

===

September 24, 2019 at 10:02 am

😐