I’ve just finished reading an interesting paper by Secrest et al. which has attracted some attention recently. It’s published in the Astrophysical Journal Letters but is also available on the arXiv here. I blogged about earlier work by some of these authors here.

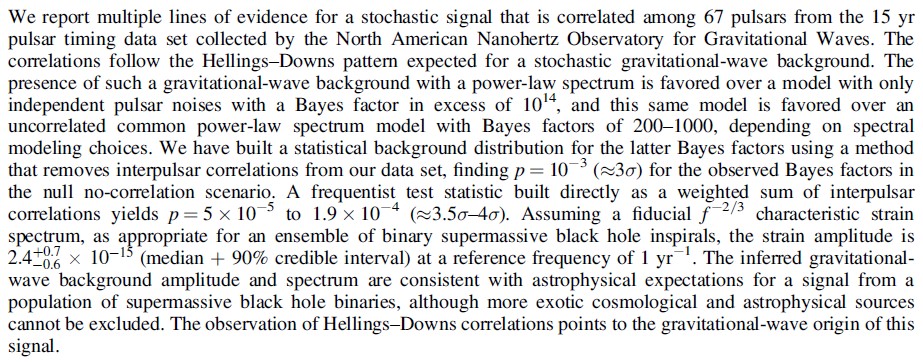

The abstract of the current paper is:

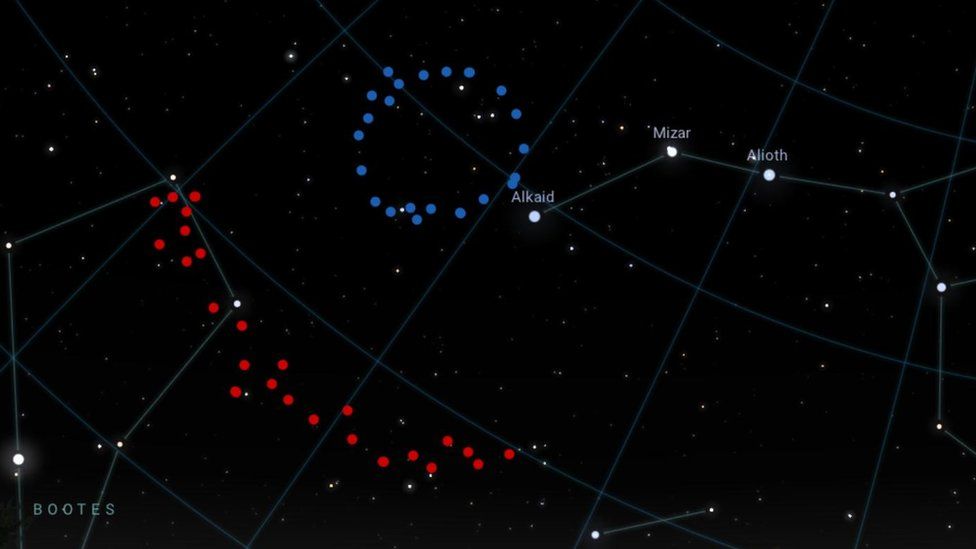

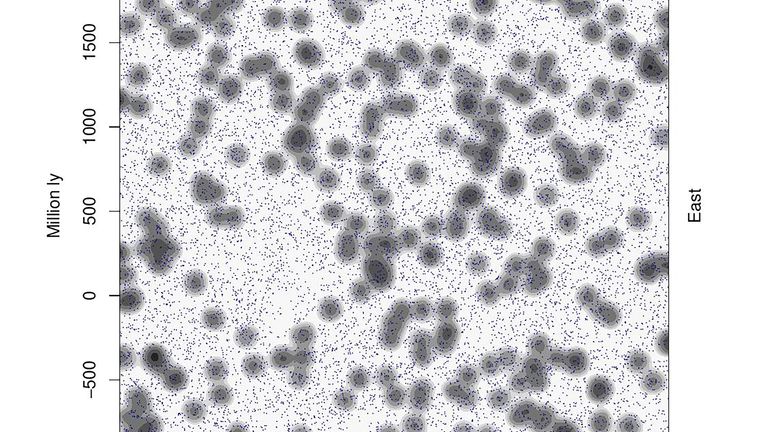

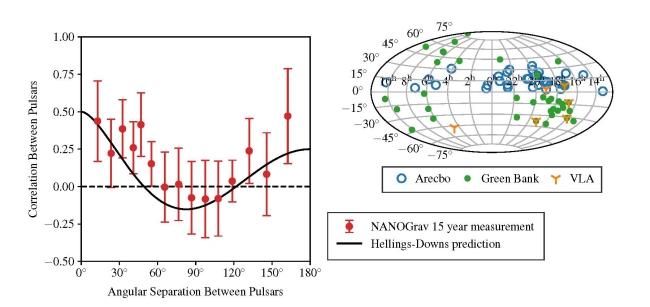

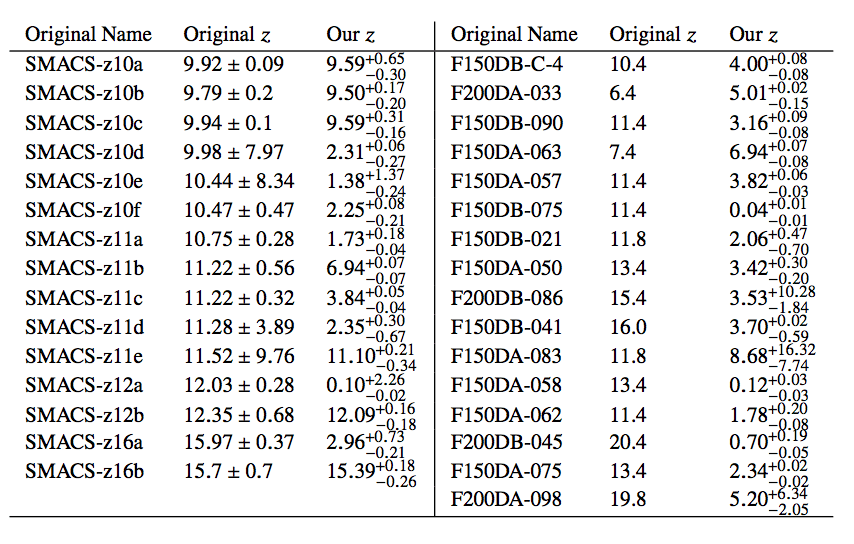

We present the first joint analysis of catalogs of radio galaxies and quasars to determine if their sky distribution is consistent with the standard ΛCDM model of cosmology. This model is based on the cosmological principle, which asserts that the universe is statistically isotropic and homogeneous on large scales, so the observed dipole anisotropy in the cosmic microwave background (CMB) must be attributed to our local peculiar motion. We test the null hypothesis that there is a dipole anisotropy in the sky distribution of radio galaxies and quasars consistent with the motion inferred from the CMB, as is expected for cosmologically distant sources. Our two samples, constructed respectively from the NRAO VLA Sky Survey and the Wide-field Infrared Survey Explorer, are systematically independent and have no shared objects. Using a completely general statistic that accounts for correlation between the found dipole amplitude and its directional offset from the CMB dipole, the null hypothesis is independently rejected by the radio galaxy and quasar samples with p-value of 8.9×10⁻³ and 1.2×10⁻⁵, respectively, corresponding to 2.6σ and 4.4σ significance. The joint significance, using sample size-weighted Z-scores, is 5.1σ. We show that the radio galaxy and quasar dipoles are consistent with each other and find no evidence for any frequency dependence of the amplitude. The consistency of the two dipoles improves if we boost to the CMB frame assuming its dipole to be fully kinematic, suggesting that cosmologically distant radio galaxies and quasars may have an intrinsic anisotropy in this frame.

I can summarize the paper in the form of this well-worn meme:

My main reaction to the paper – apart from finding it interesting – is that if I were doing this I wouldn’t take the frequentist approach used by the authors as this doesn’t address the real question of whether the data prefer some alternative model over the standard cosmological model.

As was the case with a Nature piece I blogged about some time ago, this article focuses on the p-value, a frequentist concept that corresponds to the probability of obtaining a value at least as large as that obtained for a test statistic under a particular null hypothesis. To give an example, the null hypothesis might be that two variates are uncorrelated; the test statistic might be the sample correlation coefficient r obtained from a set of bivariate data. If the data were uncorrelated then r would have a known probability distribution, and if the value measured from the sample were such that its numerical value would be exceeded with a probability of 0.05 then the p-value (or significance level) is 0.05. This is usually called a ‘2σ’ result because for Gaussian statistics a variable has a probability of 95% of lying within 2σ of the mean value.
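The correspondence between p-values and "number of σ" can be checked with a few lines of Python. This is only a sketch using the standard library: the function names are my own, and the choice between a one-sided and a two-sided tail is an assumption that has to be made explicitly. With the two-sided convention, the p-values in the abstract quoted above do appear to come out at roughly the σ levels stated there.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # zero mean, unit variance


def p_to_sigma(p, two_sided=True):
    """Convert a p-value into an equivalent Gaussian 'number of sigma'.

    Assumption: two_sided=True splits p equally between the two tails.
    """
    tail = p / 2 if two_sided else p
    # Use the lower tail and flip the sign to avoid computing 1 - tiny number.
    return -STD_NORMAL.inv_cdf(tail)


def sigma_to_p(n_sigma, two_sided=True):
    """Convert a 'number of sigma' into the corresponding Gaussian p-value."""
    tail = STD_NORMAL.cdf(-n_sigma)
    return 2 * tail if two_sided else tail


if __name__ == "__main__":
    for p in (0.05, 8.9e-3, 1.2e-5):
        print(f"p = {p:g}  ->  {p_to_sigma(p):.2f} sigma (two-sided)")
    print(f"5 sigma  ->  p = {sigma_to_p(5):.2e} (two-sided)")
```

Note that p = 0.05 corresponds to about 1.96σ in the two-sided sense, which is where the loose "2σ" label comes from.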

Anyway, whatever the null hypothesis happens to be, you can see that the way a frequentist would proceed would be to calculate what the distribution of measurements would be if it were true. If the actual measurement is deemed to be unlikely (say that it is so high that only 1% of measurements would turn out that large under the null hypothesis) then you reject the null, in this case with a “level of significance” of 1%. If you don’t reject it then you tacitly accept it unless and until another experiment does persuade you to shift your allegiance.
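To make the procedure concrete, here is a minimal sketch for the correlation example, under assumptions of my own choosing: the two variates are independent unit Gaussians under the null, the sample size is 20, and the "observed" value r = 0.378 is purely hypothetical. The null distribution of r is built by brute-force simulation, and the p-value is the fraction of simulated values at least as large as the observed one.

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Sample correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def null_p_value(r_obs, n, n_sims=10000, seed=1):
    """Estimate P(r >= r_obs) when the two variates are truly uncorrelated.

    Assumption: under the null both variates are independent unit Gaussians.
    """
    rng = random.Random(seed)
    exceed = 0
    for _ in range(n_sims):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        ys = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if pearson_r(xs, ys) >= r_obs:
            exceed += 1
    return exceed / n_sims


# Hypothetical measurement: r = 0.378 from 20 data pairs.
p_example = null_p_value(0.378, n=20)
print(f"estimated p-value: {p_example:.3f}")
```

For these particular (made-up) numbers the estimate should land close to 0.05, so a frequentist working at the 5% level would be sitting right on the boundary of rejecting the null.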

But the p-value merely specifies the probability that you would reject the null hypothesis if it were correct. This is what you would call making a Type I error. It says nothing at all about the probability that the null hypothesis is actually a correct description of the data. To make that sort of statement you would need to specify an alternative hypothesis, calculate the distribution of the test statistic based on it, and hence determine the statistical power of the test, i.e. the probability that you would actually reject the null hypothesis when it is incorrect. To fail to reject the null hypothesis when it’s actually incorrect is to make a Type II error.
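The relationship between the two kinds of error can be illustrated with the simplest possible case. This is a sketch under stated assumptions, not anything taken from the paper: the test statistic is the mean of n Gaussian measurements with known scatter, the null says the true mean is zero, and the alternative says it is some specific positive value `mu_alt` (a hypothetical parameter, as are all the names below).

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def power_one_sided_z(mu_alt, n, alpha=0.05, sigma=1.0):
    """Power of a one-sided z-test of 'mean = 0' against 'mean = mu_alt'.

    alpha is the Type I error rate: the chance of rejecting a true null.
    The returned power is the chance of rejecting the null when the
    alternative is true, so the Type II error rate is 1 - power.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)   # rejection threshold under the null
    shift = mu_alt * sqrt(n) / sigma         # expected z-score under the alternative
    return 1 - STD_NORMAL.cdf(z_crit - shift)


power = power_one_sided_z(mu_alt=0.5, n=25)
print(f"Type I error rate : 0.05")
print(f"power             : {power:.3f}")
print(f"Type II error rate: {1 - power:.3f}")
```

The point is that the power cannot even be written down until `mu_alt` is specified; the p-value alone never requires you to say what the alternative is.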

If all this stuff about p-values, significance, power and Type I and Type II errors seems a bit bizarre, I think that’s because it is. In fact I feel so strongly about this that if I had my way I’d ban p-values altogether…

This is not an objection to the value of the p-value chosen, whether this is 0.005 rather than 0.05 or a 5σ standard (which translates to about 0.000001!). While it is true that this would throw out a lot of flaky ‘two-sigma’ results, it doesn’t alter the basic problem which is that the frequentist approach to hypothesis testing is intrinsically confusing compared to the logically clearer Bayesian approach. In particular, most of the time the p-value is an answer to a question which is quite different from that which a scientist would actually want to ask, which is what the data have to say about the probability of a specific hypothesis being true or sometimes whether the data imply one hypothesis more strongly than another. I’ve banged on about Bayesian methods quite enough on this blog so I won’t repeat the arguments here, except to say that such approaches focus on the probability of a hypothesis being right given the data, rather than on properties that the data might have given the hypothesis.
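A toy calculation shows how different the two questions can be. The sketch below is not an analysis of the dipole data; it assumes a single Gaussian measurement expressed as a z-score, a null hypothesis in which the true effect is exactly zero, and an alternative in which the effect is drawn from a zero-mean Gaussian of width `tau` (in units of the standard error). Both `tau` and the prior probability of the null are assumptions that have to be supplied, which is precisely the extra input the Bayesian approach demands.

```python
from math import sqrt
from statistics import NormalDist


def posterior_prob_null(z, tau=1.0, prior_null=0.5):
    """Posterior probability of the null given an observed z-score.

    Null:        true effect is zero, so z ~ N(0, 1).
    Alternative: true effect ~ N(0, tau^2), so z ~ N(0, 1 + tau^2).
    tau and prior_null are illustrative assumptions, not measured values.
    """
    like_null = NormalDist(0.0, 1.0).pdf(z)
    like_alt = NormalDist(0.0, sqrt(1.0 + tau ** 2)).pdf(z)
    weighted_null = prior_null * like_null
    return weighted_null / (weighted_null + (1.0 - prior_null) * like_alt)


for z in (1.96, 3.0, 5.0):
    print(f"z = {z:4.2f}  ->  P(null | data) = {posterior_prob_null(z):.4f}")
```

With these particular choices a result at z = 1.96, which has a two-sided p-value of 0.05, leaves the null with a posterior probability of roughly a third rather than one in twenty. Different choices of `tau` and of the prior give different numbers, but the p-value and the posterior probability are simply not the same quantity.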

Not that it’s always easy to implement the (better) Bayesian approach. It’s especially difficult when the data are affected by complicated noise statistics and selection effects, and/or when it is difficult to formulate a hypothesis test rigorously because one does not have a clear alternative hypothesis in mind. That’s probably why many scientists prefer to accept the limitations of the frequentist approach rather than tackle the admittedly very challenging problems of going Bayesian.

But having indulged in that methodological rant, I certainly have an open mind about departures from isotropy on large scales. The correct scientific approach is now to reanalyze the data used in this paper to see if the result presented stands up, which it very well might.