Time for a lengthy and somewhat provocative guest post on the subject of the interpretation of quantum mechanics!

–o–

Galileo advocated the heliocentric system in a Socratic dialogue. Now that the Copenhagen view, that quantum mechanics should not be interpreted, has lost its hold, here is a dialogue about a way of looking at quantum mechanics that promotes progress and matches Einstein’s scepticism that God plays dice. It is embarrassing that we can predict properties of the electron to one part in a billion but cannot predict its motion in an inhomogeneous magnetic field in apparatus nearly 100 years old. It is tragic that nobody is trying to predict it, because the successes of quantum theory, in combination with its strangeness and 20th-century metaphysics, have led us to excuse its shortcomings. The speakers are Neo, a modern physicist who works in a different area, and Nino, a 19th-century physicist who went to sleep in 1900 and recently awoke. – Anton Garrett

Nino: The ultra-violet catastrophe – what about that? We were stuck with infinity when we integrated the amount of radiation emitted by an object over all wavelengths.

Neo: The radiation curve fell off at shorter wavelengths. We explained it with something called quantum theory.

Nino: That’s wonderful. Tell me about it.

Neo: I will, but there are some situations in which quantum theory doesn’t predict what will happen deterministically – it predicts only the probabilities of the various possible outcomes. For example, here is what we call a Stern-Gerlach apparatus, which generates a spatially inhomogeneous magnetic field.i It is placed in a vacuum and atoms of a certain type are shot through it. The outermost electron in each atom will set off either this detector, labelled ‘A’, or that detector, labelled ‘B’. All the atoms headed for detector B (say) have an identical quantum description, but if we divert them through another Stern-Gerlach apparatus oriented differently, then some will set off one of the two detectors associated with it, and some will set off the other.

Nino: Probabilistic prediction is an improvement on my 19th century physics, which couldn’t predict anything at all about the outcome. I presume that physicists in your era are now looking for a theory that predicts what happens each time you put a particle through successive Stern-Gerlach apparatuses.

Neo: Actually we are not. Physicists generally think that quantum theory is the end of the line.

Nino: In that case they’ve been hypnotised by it! If quantum mechanics can’t answer where the next electron will go then we should look beyond it and seek a better theory that can. It would give the probabilities generated by quantum theory as averages, conditioned on not controlling the variables of the new theory more finely than quantum mechanics specifies.

Neo: They are spoken of as ‘hidden variables’ today, often hypothetically. But quantum theory is so strange that you can’t actually talk about which path the atom takes.

Nino: Nevertheless only one of the detectors goes off. If quantum theory cannot answer which then we should look for a better theory that can. Its variables are manifestly not hidden, for I see their effect very clearly when two systems with identical quantum description behave differently. ‘Hidden variables’ is a loaded name. What you’ve not learned to do is control them. I suggest you call them shy variables.

Neo: Those who say quantum theory is the end of the line argue that the universe is not deterministic – genuinely random.

Nino: It is our theories which are deterministic or not. ‘Random’ is a word that makes our uncertainty about what a system will do sound like the system itself is uncertain. But how could you ever know that?

Neo: Certainly it is problematic to define randomness mathematically. Probability theory is the way to make inference about outcomes when we aren’t certain, and ‘probability’ should mean the same thing in quantum theory as anywhere else. But if you take the hidden variable path then be warned of what we found in the second half of the 20th century. Any hidden variables must be nonlocal.

Nino: How is that?

Neo: Suppose that the result of a measurement of a variable for a particle is determined by the value of a variable that is internal to the particle – a hidden variable. I am being careful not to say that the particle ‘had’ the value of the variable that was measured, which is a stronger statement. The result of the measurement tells us something about the value of its internal variable. Suppose that this particle is correlated with another – if, for example, the pair had zero combined angular momentum when previously they were in contact, and neither has subsequently interacted with anything else. The correlation now tells you something about the internal variable of the second particle. For situations like this a man called Bell derived an inequality; nothing sharper than an inequality is possible, because nothing specific is assumed about how the internal variables govern the outcome of measurements.ii But Bell’s inequality is violated by observations on many pairs of particles (as correctly predicted by quantum mechanics). The only physical assumption was that the result of a measurement on a particle is determined by the value of a variable internal to it – a locality assumption. So a measurement made on one particle alters what would have been seen if a measurement had been made on the other particle, which is the definition of nonlocality. Bell put it differently, but that’s the content of it.iii
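Bell’s argument can be made concrete with the widely used CHSH form of his inequality (after Clauser, Horne, Shimony and Holt), a later variant rather than Bell’s original. The minimal Python sketch below assumes an idealised spin-singlet pair and compares the best score available when each particle carries pre-agreed answers with the standard quantum prediction -cos(a - b) for the correlation. The function names and measurement angles are illustrative choices, not taken from any particular experiment.

```python
import itertools
import math


def singlet_correlation(a, b):
    """Quantum prediction for the average product of the two +1/-1 outcomes
    when a spin-singlet pair is measured along directions at angles a and b
    (radians, in a common plane)."""
    return -math.cos(a - b)


def chsh(E, a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


def local_bound():
    """Largest |S| reachable if each particle carries pre-agreed answers
    (+1 or -1) to both questions it might be asked, fixed before the choice
    of orientation. Averaging over such strategies cannot exceed this."""
    best = 0
    for A, A2, B, B2 in itertools.product((1, -1), repeat=4):
        best = max(best, abs(A * B - A * B2 + A2 * B + A2 * B2))
    return best


if __name__ == "__main__":
    S = chsh(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
    print("local bound:", local_bound())   # prints 2
    print(f"quantum |S|: {abs(S):.3f}")     # prints 2.828
```

The local bound of 2 follows because A(B - B') + A'(B + B') always has one bracket equal to zero and the other equal to ±2; the quantum value 2√2 exceeds it, which is the kind of violation the dialogue refers to.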

Nino: Nonlocality is nothing new. It was known as “action at a distance” in Newton’s theory of gravity, several centuries ago.

Neo: But gravitational force falls off as the inverse square of distance. Nonlocal influences in Bell-type experiments are heedless of distance, and this has been confirmed experimentally.iv

Nino: In that case you’ll need a theory in which influence doesn’t decay with distance.

Neo: But if influence doesn’t decay with distance then everything influences everything else. So you can’t consider how a system works in isolation any more – an assumption which physicists depend on.

Nino: We should view the fact that it often is possible to make predictions by treating a system as isolated as a constraint on any nonlocal hidden variable theory. It is a very strong constraint, in fact.

Neo: An important further detail is that, in deriving Bell’s inequality, there has to be a choice of how to set up each apparatus, so that you can choose what question to ask each particle. For example, you can choose the orientation of each apparatus so as to measure any one component of the angular momentum of each particle.

Nino: Then Bell’s analysis can be adapted to test whether two people, being interrogated in adjacent rooms with questions drawn from a given list, are in clandestine contact to coordinate their responses, beyond having merely pre-agreed their answers to the questions on the list. In that case the channel is different – perhaps they have sharper ears than their interrogators and can hear through the wall – but the nonlocality follows simply from the data analysis, not from the physics of the channel.

Neo: In that situation, although the people being interrogated can communicate between the rooms in a way that is hidden from their interrogators, the interrogators in the two rooms cannot exploit this channel to communicate between each other, because the only way they can infer that communication is going on is by getting together to compare their sets of answers. Correspondingly, you cannot use pre-prepared particle pairs to infer the orientation of one detector by varying the orientation of the second detector and looking at the results of particle measurements at that second detector alone. In fact there are general no-signalling theorems associated with the quantum level of description.v There are also more striking verifications of nonlocality using correlated particle pairs,vi and with trios of correlated particles.vii

Nino: Again you can apply the analysis to test for clandestine contact between persons interrogated in separate rooms. Let me explain why I would always search for the physics of the communication channel between the particles, the hidden variables. In my century we saw that tiny particles suspended in water, just visible under our microscopes, jiggle around. We were spurred to find the reason – the particles were being jostled by smaller ones still, which proved to be the smallest unit you can reach by subdividing matter using chemistry: atoms. Upon the resulting atomic theory you have built quantum mechanics. Yet in nearly 100 years you haven’t found hidden variables underneath quantum mechanics. You suggest they aren’t there to be found, but essentially nobody is looking, so that would be a self-fulfilling prophecy. If the non-determinists had been heeded about Brownian motion – and there were some in my time, influenced by philosophers – then the 21st century would still be stuck in the pre-atomic era. If one widget of a production line fails under test but the next widget passes, you wouldn’t say there was no reason; you’d revise your view that the production process was uniform and look for variability in it, so that if you learn how to deal with it you can make consistently good widgets.

Neo: But production lines aren’t based on quantum processes!

Nino: But I’m not wedded to quantum mechanics! I am making a point of logic, not physics. Quantum mechanics has answered some questions that my generation couldn’t and therefore superseded the theories of my time, so why shouldn’t a later generation than yours supersede quantum mechanics and answer questions that you couldn’t? It is scientific suicide for physicists to refuse to ask a question about the physical world, such as what happens next time I put a particle through successive Stern-Gerlach apparatuses. You say you are a physicist but the vocation of physicists is to seek to improve testable prediction. If you censor or anaesthetise yourself, you’ll be stuck up a dead end.

Neo: Not so fast! Nonlocality isn’t the only radical thing. The order of measurements in a Bell setup is not Lorentz-invariant, so hidden variables would also have to be acausal – effect preceding cause.

Nino: What does ‘Lorentz-invariant’ mean, please?

Neo: This term came out of the theory that resolved your problems about aether. Electromagnetic radiation has wave properties but does not need a medium (‘aether’) to ‘do the waving’ – it propagates through a vacuum. And its speed relative to an observer is always the same. That matches intuition, because there is no preferred frame that is defined by a medium. But it has a counter-intuitive consequence, that velocities do not add linearly. If a light wave overtakes me at c (lightspeed) then a wave-chasing observer passing me at v still experiences the wave overtaking him at c, although our familiar linear rule for adding velocities predicts (c – v). That rule is actually an approximation, accurate at speeds much less than c, which turns out to be a universal speed limit. For the speed of light to be the same for observers in relative motion then, because speed is distance divided by time, space and time must look different to these observers. In fact even the order of two events can look different to two such observers, if the events are so far apart that light could not travel from one to the other in the time between them! The transformation rule for space and time is named after a man called Lorentz. That not just the speed of light but all physical laws should look the same for observers related by the Lorentz transformation is called the relativity principle. Its consequences were worked out by a man called Einstein. One of them is that mass is an extremely concentrated form of energy. That’s what fuels the sun.
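The claim that the wave-chasing observer still sees light overtake him at c can be checked with the standard relativistic rule for adding velocities along one line. The worked equations below are a sketch in the dialogue’s notation, with u a velocity measured by the first observer and v the chaser’s velocity relative to him; the Lorentz factor γ and the event separations Δt, Δx are added symbols. The last lines show why the time order of two events can differ between observers.

```latex
% Relativistic addition of velocities along one line.
% u: a velocity measured by me; v: the chasing observer's velocity relative to me;
% u': the same motion as measured by the chasing observer.
u' = \frac{u - v}{1 - uv/c^{2}}

% For light, u = c, so the chaser still measures c:
u' = \frac{c - v}{1 - v/c} = \frac{c - v}{(c - v)/c} = c

% For everyday speeds uv/c^{2} \ll 1, recovering the linear rule u' \approx u - v.

% Time order of two events separated by \Delta t and \Delta x in my frame:
\Delta t' = \gamma\left(\Delta t - \frac{v\,\Delta x}{c^{2}}\right),
\qquad \gamma = \frac{1}{\sqrt{1 - v^{2}/c^{2}}}

% If |\Delta x| > c\,|\Delta t| (light cannot connect the events), any v with
% c^{2}|\Delta t|/|\Delta x| < |v| < c and the same sign as \Delta x\,\Delta t
% gives \Delta t' the opposite sign to \Delta t: the order is reversed.
```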

Nino: He was obviously a brilliant physicist!

Neo: Yes, although he would have been shocked by Bell’s theorem.viii He asserted that God did not play diceix – determinism – but he also spoke negatively of nonlocality, as “spooky actions at a distance.”x Acausality would have shocked him even more. The order of measurements on the two particles in a Bell setup can be different for two observers in relative motion. An observer dashing through the laboratory might see the measurements done in the reverse of the order that the experimenter logs. So at the hidden-variable level we cannot say which particle signals to which as a result of the measurements being made, and the hidden variables must be acausal. Acausality is also implied in ‘delayed choice’ experiments, as follows.xi Light – and, remarkably, all matter – has both particle properties (it can pass through a vacuum) and wave properties (diffraction), but only displays one property at a time. Suppose we decide, after a unit of light has passed a pair of Young’s slits, whether to measure the interference pattern – due to its diffractive properties as a wave propagating through both slits – or its position, which would tell us which single slit it traversed. According to quantum mechanics our choice seems to determine whether it traverses one slit or both, even though we made that choice after it had passed through! Acausality means that you would have to know the future in order to predict it, so this is a limit on prediction – confirming the intuition of quantum theorists that you can’t do better.

Nino: That will be so in acausal experimental situations, I accept. I believe the theory of the hidden variables will explain why time, unlike space, passes, and also entail a no-time-paradox condition.

Neo: Today we say that a theory must not admit closed time-like trajectories in space-time.

Nino: But a working hidden-variable theory would still give a reason why the system behaves as it did, even if we can’t access the information needed for prediction in situations inferred to be acausal. You can learn a lot from evolution equations even if you don’t know the initial conditions. And often the predictions of quantum theory are compatible with locality and causality, and in those situations the hidden variables might predict the outcome of a measurement exactly, outdoing quantum theory.

Neo: It also turned out that some elements of the quantum formalism do not correspond to things that can be measured experimentally. That was new in physics and forced physicists to think about interpretation. If differing interpretations give the same testable predictions, how do we choose between them?

Nino: Metaphysics then enters and it may differ among physicists, leading to differing schools of interpretation. But non-physical quantities have entered the equations of a theory before. A potential appears in the equations of Newtonian gravity and electromagnetism, but only differences in potential correspond to something physical.

Neo: That invariance, greatly generalised, lies behind the ‘gauge’ theories of my era. These are descriptions of further fundamental forces, conforming to the relativity principle that physics must look the same to observers in relative motion who are related by the Lorentz transformation. That includes quantum descriptions, of course.xii It turned out that an atom’s positive charge, and most of its mass, is concentrated in a nucleus, which is orbited by much lighter negatively charged particles called electrons – different numbers of electrons for different chemical elements. Further forces must exist to hold the positively charged particles in the nucleus together against their mutual electrical repulsion. These further forces are not familiar in everyday life, so they must fall off with distance much faster than the inverse square law of electromagnetism and gravity. Mass ‘feels’ gravity and charge feels electromagnetic forces, and there are analogues of these properties for the intranuclear forces, which are also felt by other more exotic particles not involved in chemistry. We have a single quantum description of the intranuclear forces together with electromagnetism that transforms according to the relativity principle, which we call the standard model, but we have not managed to incorporate gravity yet.

Nino: But this is still a quantum theory, still non-deterministic?

Neo: Ultimately, yes. But it gives a fit to experiment that is better than one part in a thousand million for certain properties of the electron – which it does predict deterministically.xiii That is the limit of experimental accuracy in my era, and nobody has found an error anywhere else.

Nino: That’s magnificent, and it says a huge amount for the progress of experimental physics too. But I still see no reason to move from can-do to can’t-do in aiming to outdo quantum theory.

Neo: Let me explain some quantum mechanics.xiv The variables we observe in regular mechanics, such as momentum, correspond to operators in quantum theory. The operators evolve deterministically according to the Hamiltonian of the system; waves are just free-space solutions. When you measure a variable you get one of its eigenvalues, which are real-valued because the operators are Hermitian. Quantum mechanics gives a probability distribution over the eigenspectrum. After the measurement, the system’s quantum description is given by the corresponding eigenfunction. Unless the system was already described by that eigenfunction before the measurement, its quantum description changes. That is a key difference from classical mechanics, in which you can in principle observe a system without disturbing it. Such a change (‘collapse’) makes it impossible to determine simultaneously the values of variables whose operators do not have coincident eigenfunctions – in other words, non-commuting operators. It has even been shown, by considering sets of mutually commuting operators of a system in which members of different sets do not commute with each other, that the variables of a system cannot all have definite values simultaneously.xv
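The measurement rules just described can be illustrated on the Stern-Gerlach example from the start of the dialogue. The minimal Python sketch below assumes a spin-1/2 outer electron, a second apparatus tilted by an angle theta within one plane (so real amplitudes suffice), and atoms that went ‘up’ in a first apparatus aligned with the z axis; all helper names are hypothetical. It checks that the tilted spin operator has eigenvalues +1 and -1, takes the probability of each as the squared overlap of the state with the corresponding eigenvector (giving cos²(theta/2) for ‘up’), and shows that spin operators along different axes do not commute.

```python
import math

# Pauli matrices for spin components along z and x (in units of hbar/2).
SIGMA_Z = [[1, 0], [0, -1]]
SIGMA_X = [[0, 1], [1, 0]]


def spin_operator(theta):
    """Spin component along a direction tilted by theta from z in the x-z plane:
    cos(theta)*SIGMA_Z + sin(theta)*SIGMA_X."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]


def eigenstates(theta):
    """(eigenvalue, eigenvector) pairs of spin_operator(theta)."""
    h = theta / 2
    return [(+1, (math.cos(h), math.sin(h))), (-1, (-math.sin(h), math.cos(h)))]


def apply(op, vec):
    """Matrix-vector product for 2x2 operators."""
    return tuple(sum(op[i][j] * vec[j] for j in range(2)) for i in range(2))


def matmul(a, b):
    """Matrix-matrix product for 2x2 operators."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def commutator(a, b):
    """AB - BA; zero only if the two operators commute."""
    ab, ba = matmul(a, b), matmul(b, a)
    return [[ab[i][j] - ba[i][j] for j in range(2)] for i in range(2)]


def born_probabilities(state, theta):
    """Probability of each eigenvalue: squared overlap of the (real, normalised)
    state with the corresponding eigenvector."""
    return {val: (vec[0] * state[0] + vec[1] * state[1]) ** 2
            for val, vec in eigenstates(theta)}


if __name__ == "__main__":
    up_z = (1.0, 0.0)  # atoms that went 'up' in a first apparatus aligned with z
    for theta in (0.0, math.pi / 3, math.pi / 2):
        probs = born_probabilities(up_z, theta)
        print(f"tilt {theta:.3f} rad:", {k: round(v, 3) for k, v in probs.items()})
    print("[sigma_z, sigma_x] =", commutator(SIGMA_Z, SIGMA_X))  # nonzero
```

With no tilt every atom goes the same way again; at a right angle the split is 50:50, which is the situation in which quantum theory offers only probabilities.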

Nino: Does that result rest on any aspect of quantum theory?

Neo: Yes. Unlike Bell setups, which compare experiment with a locality criterion, neither of which has anything to do with quantum mechanics (it simply predicts what is seen), this further result is founded in quantum mechanics.

Nino: But I’m not committed to quantum mechanics! This result means that the hidden variables aren’t just the values of all the system variables, but comprise something deeper that somehow yields the system variables and is not merely equivalent to the set of them.

Neo: Some people suggest that reality is operator-valued and our perplexities arise because of our obstinate insistence on thinking in – and therefore trying to measure – scalars.

Nino: An operator is fully specified by its eigenvalues and eigenfunctions; it can be assembled as a sum over them, so if an operator is a real thing then they would be real things too. If a building is real, the bricks it is constructed from are real. But I still insist that, like any other physical theory, quantum theory should be regarded as provisional.

Neo: Quantum theory answered questions that earlier physics couldn’t, such as why electrons do not fall into the nucleus of an atom although opposite charges attract. They populate the eigenspectrum of the Hamiltonian for the Coulomb potential, starting at the lowest energy eigenfunction, with not more than two electrons per eigenfunction. When the electrons are disturbed they jump between eigenstates, so that they cannot fall continuously. This jumping is responsible for atomic spectral lines, each with a vacuum wavelength inversely proportional to the difference between the two energy eigenvalues involved. That is why quantum mechanics was accepted. But the difficulty of understanding it led scientists to take a view, championed by a senior physicist at Copenhagen, that quantum mechanics was merely a way of predicting measurements, rather than telling us how things really are.
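For hydrogen, with its single electron, the rule that a spectral line’s vacuum wavelength is inversely proportional to the energy difference can be worked through directly. The sketch below assumes the textbook levels E_n = -13.6 eV / n², ignoring fine structure and the motion of the nucleus, and uses wavelength = hc / ΔE; the constant and function names are illustrative. It gives about 656 nm for the jump from n = 3 to n = 2, close to the observed red Balmer line; the small remaining discrepancy comes mainly from treating the nucleus as infinitely heavy.

```python
# Hydrogen energy levels without fine structure or nuclear recoil:
# E_n = -RYDBERG_EV / n**2 (standard textbook result).
RYDBERG_EV = 13.605693   # Rydberg energy, eV
HC_EV_NM = 1239.84198    # Planck's constant times the speed of light, eV*nm


def level_ev(n):
    """Energy eigenvalue of level n, in eV."""
    return -RYDBERG_EV / n ** 2


def vacuum_wavelength_nm(n_upper, n_lower):
    """Wavelength of the photon emitted in a jump n_upper -> n_lower:
    inversely proportional to the energy difference, lambda = hc / dE."""
    delta_e = level_ev(n_upper) - level_ev(n_lower)
    return HC_EV_NM / delta_e


if __name__ == "__main__":
    print(f"n=3 -> 2: {vacuum_wavelength_nm(3, 2):.1f} nm")  # red Balmer line
    print(f"n=2 -> 1: {vacuum_wavelength_nm(2, 1):.1f} nm")  # ultraviolet Lyman line
```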

Nino: That distinction is untestable even in classical mechanics. This is really about motivation. If you don’t believe that things ‘out there’ are real then you’ll have no motivation to think about them. The metaphysics beneath physics supposes that there is order in the world and that humans can comprehend it. Those assumptions were general in Europe when modern physics began. They came from the belief that the physical universe had an intelligent creator who put order in it, and that humans could comprehend this order because they had things in common with the creator (‘in his image’). You don’t need a religious faith to enter physics once it has got going and the patterns are made visible for all to see; but if ever the underlying metaphysics again becomes relevant, as it does when elements of the formalism do not correspond to things ‘out there,’ then such views will count. If you believe there is comprehensible and interesting order in the material universe then you will be more motivated to study it than others who suppose that differentiation is illusion and that all is one, i.e. the monist view held by some other cultures. So, in puzzling why people aren’t looking for those not-so-hidden variables, let me ask: Did the view that nature was underpinned by a divine creator get weaker where quantum theory emerged, in Europe, in the era before the Copenhagen view?

Neo: Religion was weakening during your time, as you surely noticed. That trend did continue.

Nino: I suggest the shift from optimism to defeatism about improving testable prediction is a reflection of that change in metaphysics reaching a tipping point. Culture also affects attitudes; did anything happen that induced pessimism between my era and the birth of quantum mechanics?

Neo: The most terrible war in history to that date took place in Europe. But we have moved on from the Copenhagen ‘interpretation’, which was a refusal of all questions about the formalism. That stance is acceptable provided it is seen as provisional, perhaps while the formalism is developed; but not as the last word. Physicists eventually worked out the standard model for the intranuclear forces in combination with electromagnetism. Bell’s theorem also catalysed further exploration of the weirdness of quantum mechanics, from the 1960s; let me explain. Before a measurement of one of its variables, a system is generally not in an eigenstate of the corresponding operator. This means that its quantum description must be written as a superposition of eigenstates. Although measurement discloses a single eigenvalue, remarkable things can be done by exploiting the superposition. We can gain information about whether the light-activated triggers of bombs are good or duds in an experiment in which light might be shone at each, according to a quantum mechanism.xvi (This does involve ‘sacrificing’ some of the working bombs, but without the quantum trick you would be completely stuck, because the bomb is booby-trapped to go off if you try to dismantle the trigger.) Even though we have electronic computers today that do billions of operations per second, many calculations, such as factorising very large integers, are still too large to be done in feasible lengths of time. We can now conceive of quantum computers that exploit the superposition to do many calculations in one, and drastically speed things up.xvii Communications can be made secure in the sense that eavesdropping cannot be concealed, as it would unavoidably change the quantum state of the communication system.
The apparent reality of the superposition in quantum mechanics, together with the non-existence of definite values of variables in some circumstances, means that it is unclear what in the quantum formalism is physical, and what is our knowledge about the physical – in philosophical language, what is ontological and what is epistemological. Some people even suggest that, ultimately, numbers – or at least information quantified as numbers – are physics.
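The bomb-testing experiment mentioned above is presumably the Elitzur-Vaidman scheme; the footnote is not reproduced here, so that identification is an assumption. The minimal Python sketch below assumes an ideal Mach-Zehnder interferometer with lossless 50:50 beam splitters, a single photon, and a live trigger that acts as a perfect detector in one arm; the function names are hypothetical. A dud trigger lets both paths interfere so that one output port stays dark, whereas a live trigger destroys the interference: half the live bombs explode (the ‘sacrifice’), and a quarter send the photon to the dark port, which certifies the bomb as live without setting it off.

```python
import math

R = 1 / math.sqrt(2)  # amplitude factor of an ideal 50:50 beam splitter


def beam_splitter(a0, a1):
    """One common convention: transmitted amplitude R, reflected amplitude i*R."""
    return R * (a0 + 1j * a1), R * (1j * a0 + a1)


def bomb_test(bomb_is_live):
    """Outcome probabilities for one photon entering arm 0 of a Mach-Zehnder
    interferometer whose arm 1 passes through the bomb's trigger."""
    a0, a1 = beam_splitter(1.0, 0.0)
    if not bomb_is_live:
        # A dud is transparent: both arms recombine and interfere.
        d0, d1 = beam_splitter(a0, a1)
        return {"explode": 0.0, "dark": abs(d0) ** 2, "bright": abs(d1) ** 2}
    # A live trigger absorbs the photon if it takes arm 1 (the bomb explodes).
    p_explode = abs(a1) ** 2
    # Otherwise only the arm-0 amplitude reaches the second splitter; these
    # unnormalised amplitudes give joint probabilities directly.
    d0, d1 = beam_splitter(a0, 0.0)
    return {"explode": p_explode, "dark": abs(d0) ** 2, "bright": abs(d1) ** 2}


if __name__ == "__main__":
    for live in (False, True):
        probs = bomb_test(live)
        print("live" if live else "dud ", {k: round(v, 3) for k, v in probs.items()})
```

Repeating the test on bombs that exit through the bright port raises the fraction certified without explosion, though this simple version already shows the key point: a click at the dark port is impossible for a dud.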

Nino: That’s a woeful confusion – information about what? As for deeper explanation, when things get weird you either give up on going further – which no scientist should ever do – or you take the weirdness as a clue. Any no-hidden-variables claim must involve assumptions or axioms, because you can’t prove something is impossible without starting from assumptions. So you should expose and question those assumptions (such as locality and causality). Don’t accept any axioms that are intrinsic to quantum theory, because your aim is to go beyond quantum theory.

Neo: Some people, particularly in quantum computing, suggest that when a variable is measured in a situation in which quantum mechanics predicts the result probabilistically, the universe actually splits into many copies, with each of the possible values realised in one copy.xviii We exist in the copy in which the results were as we observed them, but in other universes copies of us saw other results.

Nino: We couldn’t observe the other universes, so this is metaphysics, and more fantastic than Jules Verne! What if the spectrum of possible outcomes includes a continuum of eigenvalues? Furthermore a measurement involves an interaction between the measuring apparatus and the system, so the apparatus and system could be considered as a joint system quantum-mechanically. There would be splitting into many worlds if you treat the system as quantum and the apparatus as classical, but no splitting if you treat them jointly as quantum. Nothing privileges a measuring apparatus, so physicists are free to analyse the situation in these two differing ways – but then they disagree about whether splitting has taken place. That’s inconsistent.

Neo: The two descriptions must be reconciled. As I said, a system left to itself evolves according to the Hamiltonian of the system. When one of its variables is measured, it undergoes an interaction with an apparatus that makes the measurement. The system finishes in an eigenstate of the operator corresponding to the variable measured, while the apparatus flags the corresponding eigenvalue. This scenario has to be reconciled with a joint quantum description of the system and apparatus, evolving according to their joint Hamiltonian including an interaction term. Reconciliation is needed in order to make contact with scalar values and prevent a regress problem, since the apparatus interacts quantum-mechanically with its immediate surroundings, and so on. Some people propose that the regress is truncated at the consciousness of the observer.

Nino: I thought vitalism was discredited once the soul was found to be massless, upon weighing dying creatures! The proposal you mention actually makes the regress problem worse, because if the result of a measurement is communicated to the experimenter via intermediaries who are conscious – who are aware that they pass on the result – then does it count only when it reaches the consciousness of the experimenter (an instant of time that is anyway problematic to define)? If so, why?

Neo: That’s a regress problem on the classical side of the chain, whereas I was talking about a regress problem on the quantum side. This suggests that the regress is terminated where the system is declared to have a classical description.xix I fully share your scepticism about the role of consciousness and free will. Human subjects have tried to mentally influence the outcomes of quantum measurements and it is not accepted that they can alter the distribution from the quantum prediction. Some people even propose that consciousness exists because matter itself is conscious and the brain is so complex that this property is manifest. But they never clarify what it means to say that atoms may have consciousness, even of a primitive sort.

Nino: Please explain how our regress terminates where we declare something classical.

Neo: For any measured eigenvalue of the system there are generally many degrees of freedom in the Hamiltonian of the apparatus, so that the density of states of the apparatus is high. (This is true even if the quantum states are physically large, as in low temperature quantum phenomena such as superconductivity.) Consider the apparatus variable that flags the result of the measurement. In the sum over states giving the expectation value of this variable, cross terms between quantum states of the apparatus corresponding to different eigenvalues of the system are very numerous. These cross terms are not generally correlated in amplitude or phase, so that they average out in the expectation value in accordance with the law of large numbers.xx Even if that is not the case they are usually washed out by interactions with the environment, because you cannot in practice isolate a system perfectly. This is called decoherence,xxi and nonlocality and those striking quantum-computer effects can only be seen when you prevent it.
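The averaging argument can be illustrated numerically. The sketch below idealises each cross term as a unit-magnitude complex number exp(i·phi) with an independent, uniformly random phase; that is an assumption made for illustration, not a model of any real apparatus, and the function name is hypothetical. The magnitude of the average then shrinks roughly like 1/√N as the number N of terms grows, whereas terms whose phases are correlated do not cancel at all.

```python
import cmath
import math
import random


def mean_cross_term(n_terms, random_phases, rng):
    """Average of n_terms unit-magnitude cross terms exp(i*phi).

    random_phases=True: phases independent and uniform on [0, 2*pi)
    (the idealised 'uncorrelated' case). random_phases=False: every phase
    is 0, i.e. perfectly correlated terms that cannot cancel."""
    total = 0j
    for _ in range(n_terms):
        phi = rng.uniform(0.0, 2.0 * math.pi) if random_phases else 0.0
        total += cmath.exp(1j * phi)
    return total / n_terms


if __name__ == "__main__":
    rng = random.Random(12345)  # fixed seed so runs are repeatable
    for n in (100, 10_000, 100_000):
        m = abs(mean_cross_term(n, True, rng))
        print(f"N = {n:>7}: |average| = {m:.4f}   1/sqrt(N) = {1 / math.sqrt(n):.4f}")
    print("correlated phases:", abs(mean_cross_term(1_000, False, rng)))
```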

Nino: Remarkable! But you still have only statistical prediction of which eigenvalue is observed.

Neo: Your deterministic viewpoint has been disparaged by some as an outmoded, clockwork view of the universe.

Nino: Just because I insist on asking where the next particle will go in a Stern-Gerlach apparatus? Determinism is a metaphysical assumption; no more or less. It inspires progress in physics, which any physicist should support. Let me return to nonlocality and acausality (which is a kind of directional nonlocality with respect to time, rather than space). They imply that the physical universe is an indivisible whole at the fundamental level of the hidden variables. That is monist, but is distinct from religious monism because genuine structure exists in the hidden – or rather shy – variables.

Neo: Certainly space and time seem to be irrelevant to the hidden interactions between particles that follow from Bell tests. As I said, we have a successful quantum description of electromagnetic interactions and have combined it with the forces that hold the atomic nucleus together. In this description we regard the electromagnetic field itself as an operator-valued variable, according to the prescription of quantum theory. The next step would be to incorporate gravity. That would not be Newtonian gravity, which cannot be right because, unlike Maxwell’s equations, it only looks the same to observers in relative motion who are related by the Galilean transformation of space and time – itself only a low-speed approximation to the correct Lorentz transformation. Einstein found a theory of gravity that transforms correctly, known as general relativity, and to which Newton’s theory is an approximation. Einstein’s view was that space and time were related to gravity differently than to the other forces, but a theory that is almost equivalent to his (predicting identically in all tests to date) has since emerged that is similar to electromagnetism – a gauge theory in which the field is coupled naturally to matter which is described quantum-mechanically.xxii Unlike electromagnetism, however, the gravitational field itself has not yet been successfully quantised, which hinders combining it with the other forces so as to unify them all. Of course we demand a theory that takes account of both quantum effects and relativistic gravity, for any theory that neglects quantum effects becomes increasingly inaccurate where these are significant – typically on the small scale inside atoms – while any theory that neglects relativistic gravitational effects becomes increasingly inaccurate where they are significant – typically on large scales where matter is gathered into dense massive astronomical bodies.
Not even light can escape from some of these bodies – and, because the speed of light is a universal speed limit, nor can anything else. Quantum and gravitational effects are both large if you look at the universe far enough back in time, because we have learned that the universe was once very small and very dense. So a complete theory is indispensable for cosmologists who seek to study origins. The preferred program for quantum gravity today is known as string theory. But it has a deeply complicated structure and is infeasible to test experimentally, rendering progress slow.

Nino: But it’s still not a complete theory if it’s a quantum theory. Please say more about that very small dense stage of the universe which presumably expanded to give what we see today.

Neo: We believe the early part of the expansion underwent a great boost, known as inflation, which explains how the universe is unexpectedly smooth on the largest scale today and is also not dominated by gravity. Everything in the observed universe was, in effect, enormously diluted. Issues of causality also arise. But the mechanism for inflation is conjectural, and inflation raises other questions.

Nino: Unexpected large-scale smoothness sounds to me like a manifestation of nonlocality. Furthermore the hidden variables are acausal. Perhaps you cannot do without them at such extreme densities and temperatures. Then you wouldn’t need to invoke inflation.

Neo: We believe that inflation took place after the ‘Planck’ era, in which a full quantum theory of gravity is indispensable for accuracy. In that case our present understanding is adequate to describe the inflationary epoch.
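To give a sense of scale for the ‘Planck’ era, the usual dimensional-analysis estimate combines the reduced Planck constant ħ, Newton's constant G and the speed of light c into a characteristic time and length. The sketch below is a minimal illustration assuming CODATA 2018 values for the constants (these are not given in the original); the Planck scale marks only roughly where quantum-gravitational effects are expected to dominate, not a sharp boundary.

```python
import math

# CODATA 2018 values in SI units (an assumption of this sketch, not from the dialogue)
HBAR = 1.054571817e-34   # reduced Planck constant, J s
G = 6.67430e-11          # Newtonian gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light in vacuum, m/s (exact by definition)

def planck_time() -> float:
    """Time scale sqrt(hbar*G/c^5), built from hbar, G and c alone."""
    return math.sqrt(HBAR * G / C**5)

def planck_length() -> float:
    """Length scale sqrt(hbar*G/c^3), equal to c times the Planck time."""
    return math.sqrt(HBAR * G / C**3)

t_p = planck_time()
l_p = planck_length()
print(f"Planck time   ~ {t_p:.3e} s")
print(f"Planck length ~ {l_p:.3e} m")
```

Running it gives a Planck time of roughly 5.4 × 10⁻⁴⁴ s and a Planck length of roughly 1.6 × 10⁻³⁵ m.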

Nino: You are considering the entire universe, yet you cannot predict which detector goes off next when consecutive particles having identical quantum description are fired through a Stern-Gerlach apparatus. Perhaps you should walk before you run. Then your problems in unifying the fundamental forces and applying the resulting theory to the entire universe might vanish.

Neo: That’s ironic – the older generation exhorting the younger to revolution! To finish, what would you say to my generation of physicists?

Nino: It is magnificent that you can predict properties of the electron to nine decimal places, but that makes it more embarrassing that you cannot tell something as basic as which way a silver atom will pass through an inhomogeneous magnetic field, according to its outermost electron. That incapability should be an itch inside your oyster shell. Seek a theory which predicts the outcome when systems having identical quantum specification behave differently. Regard all strange outworkings of quantum mechanics as information about the hidden variables. Purported no-hidden-variables theorems that are consistent with quantum mechanics must contain extra assumptions or axioms, so put such theorems to work for you by ensuring that your research violates those assumptions. Ponder how to reconcile the success of much prediction upon treating systems as isolated with the nonlocality and acausality visible in Bell tests. Don’t let anything put you off because, barring a lucky experimental anomaly, only seekers find. By doing that you become part of a great project.
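Bell's argument (reference ii) is often stated in the CHSH form, after Clauser, Horne, Shimony and Holt, who are not cited in the original. The following is a minimal sketch, assuming ideal spin measurements on a spin-1/2 singlet pair with both analysers in a common plane, for which quantum mechanics gives the correlation E(a, b) = −cos(a − b). It enumerates every local deterministic assignment of ±1 outcomes and shows that the combination S never exceeds 2 in magnitude, whereas the quantum prediction at the standard angles reaches 2√2. Any local hidden-variable model is a probabilistic mixture of such deterministic assignments, so it is bounded by 2 as well. The sketch says nothing about what the hidden variables Nino asks for would be; it only exhibits the arithmetic gap that Bell tests probe. The function names are illustrative.

```python
import itertools
import math

def singlet_correlation(a: float, b: float) -> float:
    """Quantum expectation of the product of the two +/-1 outcomes for a
    spin-1/2 singlet pair, analysers at angles a and b (radians) in one plane."""
    return -math.cos(a - b)

def chsh(E, a: float, a2: float, b: float, b2: float) -> float:
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)

def local_deterministic_max() -> int:
    """Largest |S| over every local deterministic assignment of +/-1 outcomes.
    out_a, out_a2: one side's answers for settings a, a'; out_b, out_b2: the
    other side's answers for b, b'. Neither side's answer depends on the
    setting chosen at the other side."""
    best = 0
    for out_a, out_a2, out_b, out_b2 in itertools.product((-1, 1), repeat=4):
        s = out_a * out_b - out_a * out_b2 + out_a2 * out_b + out_a2 * out_b2
        best = max(best, abs(s))
    return best

# Standard angle choice that maximises the quantum value for this correlation
quantum_S = chsh(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)

print("local deterministic bound on |S|:", local_deterministic_max())
print(f"quantum |S| = {abs(quantum_S):.4f}, 2*sqrt(2) = {2 * math.sqrt(2):.4f}")
```

Running it prints a local bound of 2 and a quantum value of about 2.8284.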

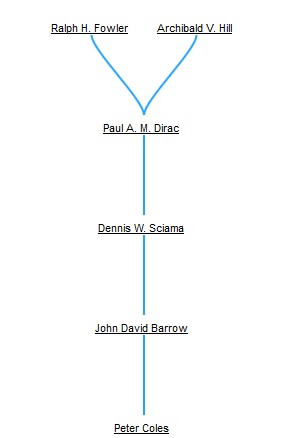

Anthony Garrett has a PhD in physics (Cambridge University, 1984) and has held postdoctoral research contracts in the physics departments of Cambridge, Sydney and Glasgow Universities. He is Managing Editor of Scitext Cambridge (www.scitext.com), an editing service for scientific documents.

i Gerlach, W. & Stern, O., “Das magnetische Moment des Silberatoms”, Zeitschrift für Physik 9, 353-355 (1922).

ii Bell, J.S., “On the Einstein Podolsky Rosen paradox”, Physics 1, 195-200 (1964).

iii Garrett, A.J.M., “Bell’s theorem and Bayes’ theorem”, Foundations of Physics 20, 1475-1512 (1990).

iv The most rigorous test of Bell’s theorem to date is: Giustina, M., Mech, A., Ramelow, S., Wittmann, B., Kofler, J., Beyer, J., Lita, A., Calkins, B., Gerrits, T., Nam, S.-W., Ursin, R. & Zeilinger, A., “Bell violation using entangled photons without the fair-sampling assumption”, Nature 497, 227-230 (2013). For a test of the 3-particle case, see: Bouwmeester, D., Pan, J.-W., Daniell, M., Weinfurter, H. & Zeilinger, A., “Observation of three-photon Greenberger-Horne-Zeilinger entanglement”, Physical Review Letters 82, 1345-1349 (1999).

v Bussey, P.J., “Communication and non-communication in Einstein-Rosen experiments”, Physics Letters A123, 1-3 (1987).

vi Mermin, N.D., “Quantum mysteries refined”, American Journal of Physics 62, 880-887 (1994). This is a very clear tutorial recasting of: Hardy, L., “Nonlocality for two particles without inequalities for almost all entangled states”, Physical Review Letters 71, 1665-1668 (1993).

vii Mermin, N.D., “Quantum mysteries revisited”, American Journal of Physics 58, 731-734 (1990). This is a tutorial recasting of the ‘GHZ’ analysis: Greenberger, D.M., Horne, M.A. & Zeilinger, A., 1989, “Going beyond Bell’s theorem”, in Bell’s Theorem, Quantum Theory and Conceptions of the Universe, ed. M. Kafatos (Kluwer Academic, Dordrecht, Netherlands), p.69-72.

viii Einstein, A., Podolsky, B. & Rosen, N., “Can quantum-mechanical description of physical reality be considered complete?”, Physical Review 47, 777-780 (1935).

ix Einstein, A., Letter to Max Born, 4th December 1926. English translation in: The Born-Einstein Letters 1916-1955 (Macmillan Press, Basingstoke, UK), 2005, p.88.

x Einstein, A., Letter to Max Born, 3rd March 1947. Ibid., p.155.

xi Wheeler, J.A., 1978, “The ‘past’ and the ‘delayed-choice’ double-slit experiment”, in Mathematical Foundations of Quantum Theory, ed. A.R. Marlow (Academic Press, New York, USA), p.9-48. Experimental verification: Jacques, V., Wu, E., Grosshans, F., Treussart, F., Grangier, P., Aspect, A. & Roch, J.-F., “Experimental Realization of Wheeler’s Delayed-Choice Gedanken Experiment”, Science 315, 966-968 (2007).

xii Weinberg, S., 2005, The Quantum Theory of Fields, vols. 1-3 (Cambridge University Press, UK).

Hanneke, D., Fogwell, S. & Gabrielse, G., “New measurement of the electron magnetic moment and the fine-structure constant”, Physical Review Letters 100, 120801 (2008); 4pp.

xiii Dirac, P.A.M., 1958, The Principles of Quantum Mechanics (4th ed., Oxford University Press, UK).

xiv Mermin, N.D., “Simple unified form for the major no-hidden-variables theorems”, Physical Review Letters 65, 3373-3376 (1990); Mermin, N.D., “Hidden variables and the two theorems of John Bell”, Reviews of Modern Physics 65, 803-815 (1993). This is a simpler version of the ‘Kochen-Specker’ analysis: Kochen, S. & Specker, E.P., “The problem of hidden variables in quantum mechanics”, Journal of Mathematics and Mechanics 17, 59-87 (1967).

xvi Elitzur, A.C. & Vaidman, L., “Quantum mechanical interaction-free measurements”, Foundations of Physics 23, 987-997 (1993).

xvii Mermin, N.D., 2007, Quantum Computer Science (Cambridge University Press, UK).

xviii DeWitt, B.S. & Graham, N. (eds.), 1973, The Many-Worlds Interpretation of Quantum Mechanics (Princeton University Press, New Jersey, USA). The idea is due to Hugh Everett III, whose work is reproduced in this book.

xix This immediately resolves the well-known Schrödinger’s cat paradox.

xx Van Kampen, N.G., “Ten theorems about quantum mechanical measurements”, Physica A153, 97-113 (1988).

xxi Zurek, W.H., “Decoherence and the transition from quantum to classical”, Physics Today 44, 36-44 (1991).

xxii Lasenby, A., Doran, C. & Gull, S., “Gravity, gauge theories and geometric algebra”, Philosophical Transactions of the Royal Society A356, 487-582 (1998). This paper derives and studies gravity as a gauge theory using the mathematical language of Clifford algebra, which extends complex analysis to more than two dimensions. Just as complex analysis is more efficient than vector analysis in two dimensions, Clifford algebra is superior to conventional vector/tensor analysis in higher dimensions. (Quaternions are the 3-dimensional version, a generalisation that Nino would doubtless appreciate.) Nobody uses the Roman numeral system any more for long division! This theory of gravity involves two fields that obey first-order differential equations with respect to space and time, in contrast to general relativity, in which the metric tensor obeys second-order equations. These gauge fields derive from translational and rotational invariance and can be expressed with reference to a flat background spacetime (i.e., whenever coordinates are needed they can be Cartesian or some convenient transformation thereof). Presumably it is these two gauge fields, rather than the metric tensor, that should satisfy quantum (non-)commutation relations in a quantum theory of gravity.
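The claim in note xxii that Clifford algebra extends complex numbers to higher dimensions, with quaternions appearing in three dimensions, can be checked in a few lines. The following is a minimal sketch, not code from the cited paper: it assumes a Euclidean signature (every basis vector squares to +1), stores basis blades as bitmasks, and uses hypothetical function names. In two dimensions the bivector e1e2 squares to −1, so scalar-plus-bivector elements multiply like complex numbers; in three dimensions the bivectors, with the sign convention chosen below, obey Hamilton's quaternion rules.

```python
# Blades as bitmasks: bit 0 = e1, bit 1 = e2, bit 2 = e3; mask 0 is the scalar 1.

def reorder_sign(a: int, b: int) -> int:
    """Sign from sorting blade a followed by blade b into canonical order:
    each basis vector of a must pass every basis vector of b with a lower
    index, and each such transposition flips the sign."""
    a >>= 1
    swaps = 0
    while a:
        swaps += bin(a & b).count("1")
        a >>= 1
    return -1 if swaps % 2 else 1

def geometric_product(x: dict, y: dict) -> dict:
    """Product of multivectors stored as {blade_bitmask: coefficient}.
    Repeated basis vectors cancel (e_i e_i = +1 here), hence the XOR."""
    out: dict = {}
    for a, ca in x.items():
        for b, cb in y.items():
            blade = a ^ b
            out[blade] = out.get(blade, 0) + reorder_sign(a, b) * ca * cb
    return {k: v for k, v in out.items() if v != 0}

E12, E13, E23 = 0b011, 0b101, 0b110

# 2D: e1e2 squares to -1, so {scalar, e12} elements behave like complex numbers
z1 = {0: 1, E12: 2}   # plays the role of 1 + 2i
z2 = {0: 3, E12: 4}   # plays the role of 3 + 4i
print(geometric_product(z1, z2))  # {0: -5, 3: 10}, i.e. -5 + 10i

# 3D: i = e3e2, j = e1e3, k = e2e1 (one sign convention among several)
i = {E23: -1}
j = {E13: 1}
k = {E12: -1}
for name, q in (("i", i), ("j", j), ("k", k)):
    print(name, "squared:", geometric_product(q, q))  # {0: -1} each
print("ij:", geometric_product(i, j))  # equals k
print("ji:", geometric_product(j, i))  # equals -k
```

The bitmask representation is only one convenient design; it makes the product of two basis blades a single XOR plus a sign count, which keeps the sketch short.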